Creating an Internet Uplink Dashboard

Part 1: Thinking it up

I usually go to Drexel DragonLAN's regular LAN parties just to hang out for the night, work on code, browse Reddit, show off Windows 8, and sometimes record and edit video. When I do game, it's to build something awesome in Minecraft or to display my 1337 n00b1shn355 at Team Fortress 2.

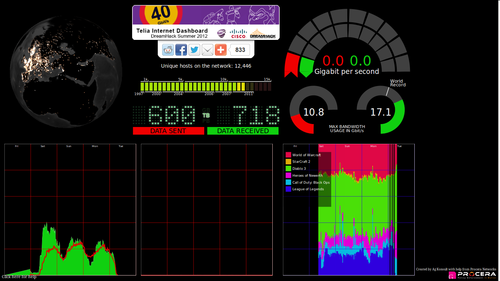

So whenever I'm at a LAN, my programming brain thinks about how much could be improved or done differently if there were software to automate it. There's always the obvious registration and communication system, and we've been toying with that idea for a while. Recently, though, I started thinking about data aggregation. I came across Dreamhack's Internet Dashboard and decided it would be a pretty fun thing to recreate and play with.

Telia's dashboard at Dreamhack ran on a respectable stack of equipment and was developed with help from Cisco and various other big names. So what if I tried remaking it with some interpreted languages, a random midtower with a few PCIe gigabit Ethernet cards, and some Linux tools?

I've only done a little experimenting with the idea so far, but hopefully I'll find the time to get a full-scale test going. The end goal is to run a large LAN party (maybe 64 players) through my setup and provide a realtime dashboard of what's going on at all times, without putting the players' uplink at risk.

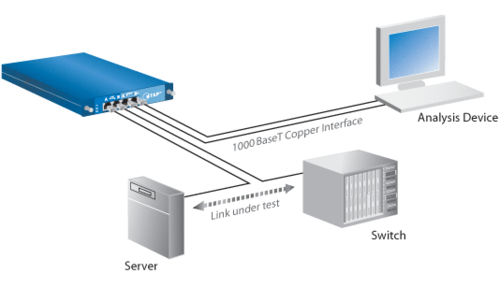

Some preliminary googling turned up some nice-looking but very pricey "network tap" devices, with a decent-looking model costing close to four figures. 10/100Mbps Ethernet seems insanely easy to tap with some wire crossing, and cheap commercial taps exist for it, but 1000Mbps Ethernet is much more complicated: 1000BASE-T sends and receives on all four wire pairs at once, so there's no separate transmit pair to split off, and the tap needs active logic. Buying a tap would be ideal, but we're all college students here.

Part 2: Tapping the wire

What are the other options? My first instinct was to put a nice *NIX-based box in the tap position. To keep the network running at full speed, the box would need two gigabit Ethernet cards for the link itself; to send the tapped traffic out, it would need another one or two, since a full-duplex gigabit link can carry up to 1Gbps in each direction at once. Too bad quad-port gigabit cards seem to run in the couple-hundred-dollar range. Alternatively, the tap box could compute the statistics on the packets itself, so it only needs a 100Mbps port to reach the webserver box (aka my laptop); at that point, it could even send the data out over one of the main interfaces. Either way, the two main ports would be bridged together with the Linux bridge utilities (brctl), probably using a command sequence like:

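```sh
#!/bin/sh
# Run as root. Bridges eth0 and eth1 so traffic passes straight through the box.
brctl addbr br0            # create the bridge device
brctl addif br0 eth0       # attach both NICs as bridge ports
brctl addif br0 eth1
ifconfig eth0 0.0.0.0 up   # the ports themselves carry no IP address
ifconfig eth1 0.0.0.0 up
ifconfig br0 up            # bring the bridge itself up
dhclient br0               # the box gets its management IP on the bridge
```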

I haven't tested it yet, but that script should connect the two Ethernet cards together while still giving the box an IP address, so I can connect to it and possibly use it for traffic diagnostics if I trust my script and the box can handle the traffic load.
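To check that the bridge actually picked up both NICs (from the shell, `brctl show br0` does this), a script can read the bridge's port list from sysfs, where Linux exposes one entry per bridge port under `/sys/class/net/<bridge>/brif/`. This is a minimal sketch: the function names `bridge_ports` and `bridge_is_ready` are hypothetical, and `br0`, `eth0` and `eth1` are simply the names used in the script above.

```python
import os
from typing import Iterable, List


def bridge_ports(bridge: str = "br0", sysfs_root: str = "/sys/class/net") -> List[str]:
    """Return the interfaces attached to a Linux bridge, sorted by name.

    The kernel lists each bridge port as an entry in <sysfs_root>/<bridge>/brif/.
    Raises FileNotFoundError if the bridge does not exist.
    """
    return sorted(os.listdir(os.path.join(sysfs_root, bridge, "brif")))


def bridge_is_ready(bridge: str = "br0",
                    expected: Iterable[str] = ("eth0", "eth1"),
                    sysfs_root: str = "/sys/class/net") -> bool:
    """True if every expected NIC is currently a port of the bridge."""
    try:
        ports = set(bridge_ports(bridge, sysfs_root))
    except FileNotFoundError:
        return False  # bridge missing (brctl addbr not run yet)
    return set(expected) <= ports
```

The `sysfs_root` parameter only exists so the check can be pointed at a fake directory tree when testing without root or real hardware.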

Now that I have a Linux-based network switch, I need to add tap functionality so I can pull packets off the wire. Past experience pointed me to Wireshark, and I quickly came across its dumpcap program, which captures an endless stream of packets off the wire and writes them to a file. Just to be annoying, though, dumpcap refuses to write to either standard output or an inter-process pipe (FIFO), so I ended up creating a PTY (pseudo-terminal) for it to write to, so the data came to my script instead of going to disk.
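Here is a minimal Python sketch of the same PTY trick. The original pipeline fed a Ruby script, so this is a stand-in, not that code. It opens a PTY pair, puts it in raw mode so the terminal line discipline doesn't rewrite bytes (such as newlines) inside the binary capture, points dumpcap's `-w` at the slave device path, and reads the capture back from the master side. It assumes dumpcap is run with `-P` so it writes classic libpcap format rather than pcapng, and that the bridge is named `br0`. The function names are hypothetical.

```python
import os
import pty
import struct
import subprocess
import tty
from typing import BinaryIO, Iterator, Tuple


def read_exact(stream: BinaryIO, n: int) -> bytes:
    """Read exactly n bytes, looping over short reads (a PTY delivers data in chunks).

    Returns b"" only if the stream ends before any byte of this read arrived.
    """
    buf = bytearray()
    while len(buf) < n:
        try:
            chunk = stream.read(n - len(buf))
        except OSError:  # Linux reports EIO on a PTY master once the slave side is gone
            chunk = b""
        if not chunk:
            if buf:
                raise EOFError("capture stream ended mid-record")
            return b""
        buf += chunk
    return bytes(buf)


def iter_pcap(stream: BinaryIO) -> Iterator[Tuple[float, bytes]]:
    """Yield (timestamp, frame) pairs from a classic libpcap stream (not pcapng)."""
    header = read_exact(stream, 24)  # global header: magic, version, zone, sigfigs, snaplen, linktype
    if not header:
        return
    if header[:4] == b"\xd4\xc3\xb2\xa1":
        order = "<"  # writer was little-endian
    elif header[:4] == b"\xa1\xb2\xc3\xd4":
        order = ">"  # writer was big-endian
    else:
        raise ValueError("not a microsecond libpcap stream; was dumpcap run with -P?")
    while True:
        record = read_exact(stream, 16)  # ts_sec, ts_usec, incl_len, orig_len
        if not record:
            return
        ts_sec, ts_usec, incl_len, _orig_len = struct.unpack(order + "IIII", record)
        frame = read_exact(stream, incl_len)
        if len(frame) != incl_len:
            raise EOFError("capture stream ended mid-frame")
        yield ts_sec + ts_usec / 1_000_000, frame


def capture_via_pty(interface: str = "br0") -> Iterator[Tuple[float, bytes]]:
    """Run dumpcap with its output file set to a PTY slave and parse what arrives on the master."""
    master, slave = pty.openpty()
    tty.setraw(slave)  # disable output post-processing so binary bytes pass through unchanged
    proc = subprocess.Popen(["dumpcap", "-i", interface, "-P", "-w", os.ttyname(slave)])
    try:
        with os.fdopen(master, "rb", buffering=0) as stream:
            yield from iter_pcap(stream)
    finally:
        proc.terminate()
        proc.wait()
        os.close(slave)
```

The parent keeps its own slave descriptor open, so reads on the master simply block while dumpcap is starting up instead of failing early. The trade-off is that the generator never sees end-of-stream by itself, which suits an endless capture; closing the generator stops dumpcap.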

Once I had the stream flowing into my Ruby script, I decided to split up the work: the script buffers packets and sends each full buffer round-robin to a pool of Node.js-based packet parsers. The final load-balancing script is on GitHub and can process packets at around 1.8Gbps on my relatively weak i7 laptop. The Node.js parsers pull MAC addresses, IP addresses, ports, and other such info out of the packets, tally them up, and ship the totals off to an aggregator through a RabbitMQ message broker.
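The buffering, parsing and tallying steps can be sketched in Python. The real parsers were Node.js processes and the dispatcher was Ruby, so this only illustrates the idea. It assumes plain Ethernet frames carrying IPv4 and ignores VLAN tags and IPv6. The helper names (`parse_frame`, `tally`, `round_robin_batches`, `merge_totals`) and the two counters chosen are hypothetical.

```python
import itertools
import struct
from collections import Counter
from typing import Dict, Iterable, Iterator, List, Optional, Tuple

ETH_HEADER_LEN = 14
ETHERTYPE_IPV4 = 0x0800
TCP, UDP = 6, 17


def parse_frame(frame: bytes) -> Optional[dict]:
    """Extract MACs, IPv4 addresses, protocol and TCP/UDP ports from one Ethernet frame."""
    if len(frame) < ETH_HEADER_LEN:
        return None  # runt frame, nothing to read
    info = {
        "dst_mac": frame[0:6].hex(":"),
        "src_mac": frame[6:12].hex(":"),
        "length": len(frame),
    }
    (ethertype,) = struct.unpack("!H", frame[12:14])
    ip = frame[ETH_HEADER_LEN:]
    if ethertype != ETHERTYPE_IPV4 or len(ip) < 20:
        return info  # ARP, IPv6, VLAN-tagged, etc. only get MAC-level info
    header_len = (ip[0] & 0x0F) * 4  # IHL counts 32-bit words
    info["proto"] = ip[9]
    info["src_ip"] = ".".join(str(b) for b in ip[12:16])
    info["dst_ip"] = ".".join(str(b) for b in ip[16:20])
    if ip[9] in (TCP, UDP) and header_len >= 20 and len(ip) >= header_len + 4:
        # TCP and UDP both start with 16-bit source and destination ports
        info["src_port"], info["dst_port"] = struct.unpack("!HH", ip[header_len:header_len + 4])
    return info


def tally(frames: Iterable[bytes]) -> Dict[str, Counter]:
    """Worker side: count bytes per source IP and packets per destination port for one buffer."""
    totals = {"bytes_by_src_ip": Counter(), "packets_by_dst_port": Counter()}
    for frame in frames:
        info = parse_frame(frame)
        if info is None:
            continue
        if "src_ip" in info:
            totals["bytes_by_src_ip"][info["src_ip"]] += info["length"]
        if "dst_port" in info:
            totals["packets_by_dst_port"][info["dst_port"]] += 1
    return totals


def round_robin_batches(frames: Iterable[bytes], n_workers: int,
                        batch_size: int) -> Iterator[Tuple[int, List[bytes]]]:
    """Dispatcher side: buffer frames and hand each full buffer to the next worker in turn."""
    workers = itertools.cycle(range(n_workers))
    batch: List[bytes] = []
    for frame in frames:
        batch.append(frame)
        if len(batch) == batch_size:
            yield next(workers), batch
            batch = []
    if batch:  # flush the final partial buffer
        yield next(workers), batch


def merge_totals(parts: Iterable[Dict[str, Counter]]) -> Dict[str, Counter]:
    """Aggregator side: sum the per-buffer counters from every worker."""
    merged: Dict[str, Counter] = {}
    for part in parts:
        for key, counter in part.items():
            merged.setdefault(key, Counter()).update(counter)
    return merged
```

In a real deployment each worker index would stand for a separate parser process, and `merge_totals` would run in the aggregator as the totals arrive over the message queue. Sending whole buffers rather than single packets keeps the per-message overhead small enough for the dispatcher to keep up.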

When I get back to Drexel, I'm going to try sourcing the hardware to run some tests. If everything looks good, it's time to work on a nice, fancy interface with realtime goodness that can be projected at the event and browsed by participants. I'll write a follow-up post when I get further with this.

If you have any tips or tricks for me that you think may be useful, please comment on this post.